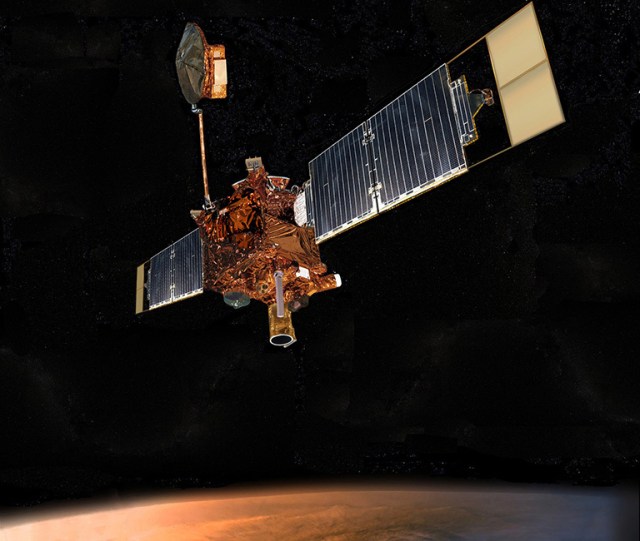

Mars Global Surveyor: A Decade of Discoveries on the Red Planet and Its Enduring Legacy in Space Science

Mars Global Surveyor was a revolutionary spacecraft that spent ten years orbiting the Red Planet, completely transforming scientists’ knowledge of Mars. By thoroughly examining the entire Martian surface, atmosphere, and…

The Smoking Pandemic: Why Libertarians Are on the Wrong Side of Science and Public Opinion

Smoking is a major public health issue that requires action, and libertarians are on the losing side of arguments regarding the merits of restricting it. The science behind the need…

President Biden Takes on Trump’s Lifestyle at IBEW Conference: How Biden Is Winning Over Union Workers for 2024

During a conference for the International Brotherhood of Electrical Workers, a labor group that has endorsed his bid for reelection, President Biden spoke about his upbringing compared to that of…

PNW’s Nursing and Business Programs Recognized in U.S. News and World Report 2024 Rankings: Advancing Careers through Exceptional Education

Purdue University Northwest’s (PNW) graduate programs in Nursing and Business have been recognized in the prestigious U.S. News and World Report 2024 Best Graduate Program rankings. The Master of Science…

Nearly 10,000 Missiles and Guided Bombs Hit Ukraine in 2021

During a televised speech at the NATO-Ukraine Council meeting on April 19, President of Ukraine Volodymyr Zelensky requested that NATO provide at least 7 more Patriot air defense complexes or…

Georgia-Pacific Accelerates Manufacturing Decision-Making Using Gen AI

Georgia-Pacific, a manufacturer of paper products and parent company to brands like Quilted Northern, Brawny, Dixie, and Vanity Fair, is using generative AI capabilities to enhance its manufacturing decision-making…

Jimmies men’s volleyball defeat Kansas Wesleyan in GPAC semifinals

The University of Jamestown, ranked No. 9, successfully defended their home court in the GPAC tournament with a sweep over Kansas Wesleyan University (25-15, 25-12, 25-18) on Friday, April 19,…

Empowering Communities: New York Cares Offers More Than 200 Volunteer Opportunities During National Volunteer Week

New York Cares is dedicated to providing more than 200 volunteer opportunities during National Volunteer Week from April 19-25. The organization aims to engage over one thousand volunteers to serve…

Rising Measles Cases and the Decline of Vaccination Rates in the US: A Concern for Public Health

In recent years, the anti-vax movement has gained traction in the United States, leading to a surge in measles outbreaks. These outbreaks have occurred in various states, prompting officials to…

Trump’s High Stakes in Truth Social: Will Investment Dilemma Derail Election Campaign?

As the US presidential election approaches, Donald Trump is turning to the social network Truth Social in an attempt to raise funds for his campaign. However, the investment in this venture…