Global GDP Growth Drives Bullish Outlook for Oil Demand, but Inflation and Energy Shocks Could Keep Prices in Check

A Reuters poll of economists suggests that the global economy is likely to stay on a strong course, which is bullish for oil demand. However, inflation and energy shocks could keep prices range-bound.…

Bozeman’s Restaurant Week: A Time to Enjoy the Unique Flavors of Downtown

This April, Downtown Bozeman is hosting its annual Restaurant Week, featuring over 30 local eateries on Main Street. The event has been successful in attracting new customers and creating a…

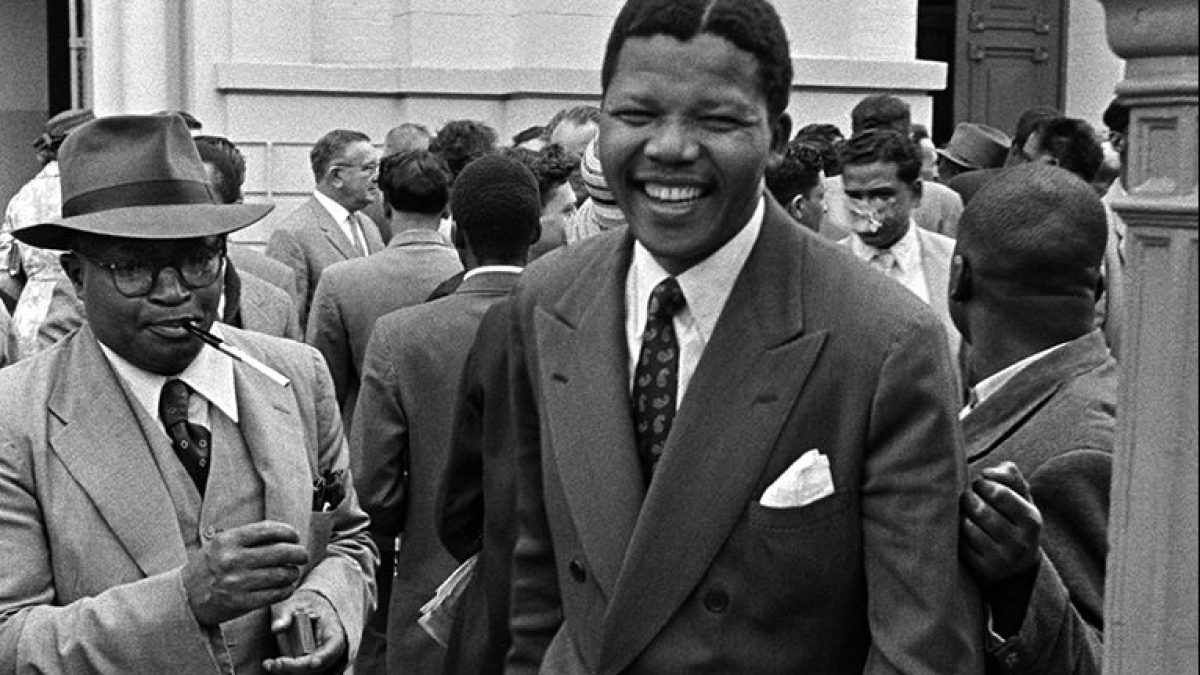

Remembering Mandela: A photographic look back at the era of apartheid in South Africa | Advocating for Human Rights

Photographer Jurgen Schadeberg (1931-2020) dedicated his life to documenting the struggle against apartheid in South Africa. Before his passing in 2020, Schadeberg shared some of his most iconic images and…

Mid-State Literacy Council is offering a technology class on accessing online prescription drug resources

St. Mark Lutheran Church in Howard will be hosting a free technology class on Tuesday, May 7 at 11 a.m. The class will focus on online prescription drug resources and…

Vote now for the NJ High School Game Changers Sports Awards 2024!

Voting is now open for the second annual NJ High School Game Changers Sports Awards, scheduled to take place on June 17, 2024. To cast your vote, simply scroll to the…

Unleashing Their Potential: Celebrating Outstanding Leaders at 2024 Impact and Excellence Awards

The DoubleTree by Hilton was the venue for the 2024 Impact and Excellence Awards, where over 50 students, faculty, and staff were recognized for their outstanding contributions to the college…

HIPAA Gives Individuals Control Over Their Medical Records with MyWellSpan Portal

Individuals have the right to access their medical records and make changes to them under the Health Insurance Portability and Accountability Act (HIPAA). A convenient way to do this is…

Election Machine Reveals Which Candidates Align with Kauppalehti Readers’ Views Ahead of European Parliament Elections

The European Parliament election season is upon us, with elections taking place on June 9. In order to help voters make an informed decision, Kauppalehti has launched its election machine.…

The Double-Edged Sword of Hosting the NFL Draft: Insights from Local Business Owners in Kansas City

In 2023, Union Station in Kansas City was the site of the NFL Draft, bringing excitement to local businesses. Jayaun Smith, owner of Sauced, says that being part of the…

South Korean Government Held Responsible for World Scout Jamboree Disaster

The South Korean government has denied responsibility for the disastrous World Scout Jamboree last year, despite being blamed by investigators. Tens of thousands were evacuated from the campsite due to…